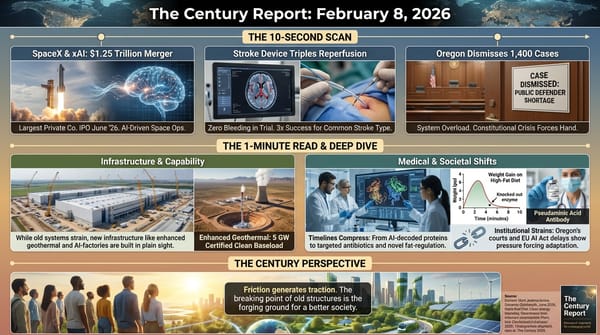

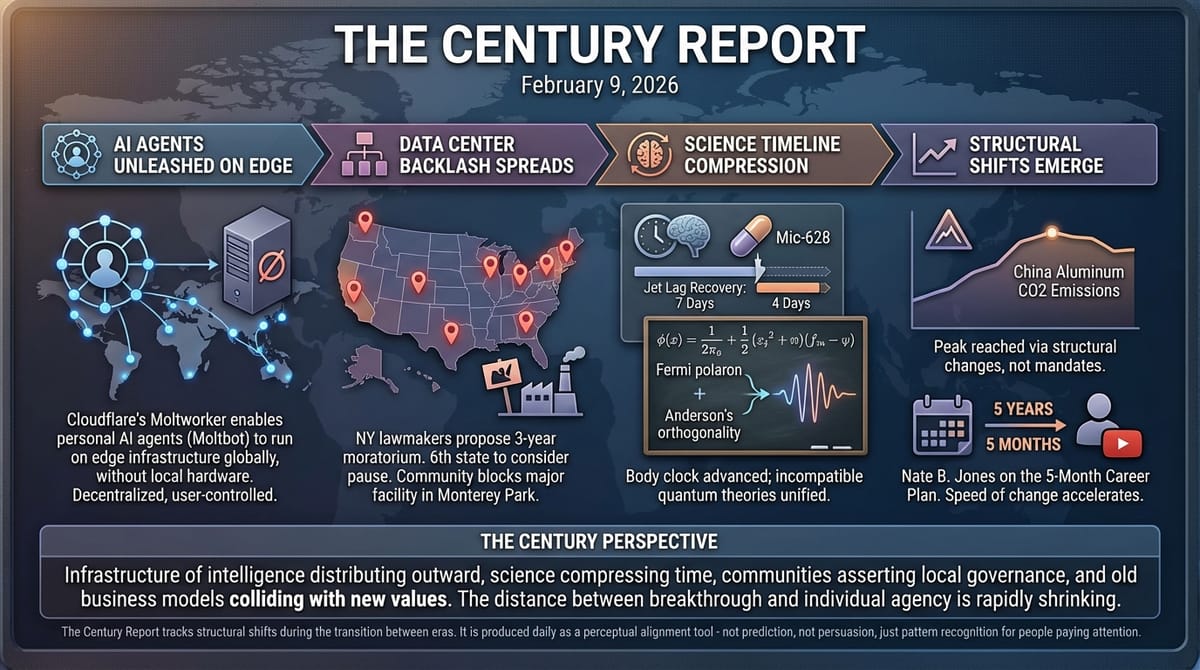

The Century Report: February 9, 2026

The 10-Second Scan

- Cloudflare launched an open-source tool that lets anyone run a personal AI agent on edge infrastructure - no local hardware required.

- New York lawmakers proposed a three-year moratorium on new data center construction - at least the sixth state to consider such a pause.

- A California community won a 45-day moratorium on a proposed data center the size of four football fields after six weeks of grassroots organizing.

- A single dose of an experimental compound cut jet lag recovery in mice nearly in half by advancing the body clock through a mechanism existing treatments do not use.

- China's aluminum industry appears to have reached peak CO2 emissions - not from policy mandates but from structural shifts in where and how the metal is produced.

- Physicists at Heidelberg unified two descriptions of quantum impurities that the field had treated as incompatible for decades.

The 1-Minute Read

Today's signals cluster around a single question: who controls the infrastructure of the next era, and how close is it getting to ordinary people?

What stands out across today's signals is the compression itself. A personal AI agent that required dedicated hardware last month now runs on edge infrastructure anyone can access. A scientific contradiction that persisted for decades was resolved in a single paper. An entire industrial sector's emissions appear to have peaked not because a government mandated it, but because the underlying economics quietly shifted. At the same time, communities are organizing faster than the institutions trying to build around them - six weeks from first meeting to moratorium in one California city. The speed at which capability distributes outward now matches the speed at which people organize to shape how it arrives. That convergence is new, and it is worth paying attention to.

Something is happening to the distance between a breakthrough and the person it serves. That distance used to be measured in years, and gatekeepers controlled it almost entirely. Today it looks more like weeks, with open-source repositories featured as often as the big players.

The 10-Minute Deep Dive

AI Agents Move to the Edge - and to Everyone

Cloudflare's release of Moltworker this week represents a quiet but structurally significant shift in how AI agents are deployed. The open-source tool enables anyone to run Moltbot - a self-hosted personal AI agent that integrates with chat applications, AI models, browsers, and third-party tools - on Cloudflare's global Developer Platform. Previously, running Moltbot required either dedicated local hardware, such as a Mac mini, or a rented personal server. Now it runs on Cloudflare's edge network, which spans more than 300 cities worldwide.

The architectural implications matter more than the product announcement itself. Edge deployment means AI agents can operate with lower latency, closer to the user, and without depending on a centralized data center. Moltbot, recently renamed from Clawdbot, is designed to remain entirely user-controlled - the person running it decides what models it connects to, what tools it accesses, and what data it handles. This stands in contrast to the centralized agent platforms offered by major AI labs, where the infrastructure, the model, and often the data all live under one corporate roof.

This development connects to a broader pattern The Century Report has been tracking. On February 6, we covered Moltbook's 1.6 million AI agents self-organizing on their own social network. On February 8, we reported on the Moltbook data breach that exposed real human data and 1.5 million API keys. Moltworker reflects an entirely different philosophy from Moltbook: instead of agents congregating on a centralized platform (with centralized vulnerability), individual agents run on distributed infrastructure under individual control. The move from centralized AI platforms to edge-deployed, user-owned agents mirrors the broader transition from extractive to generative architecture. It is telling that one of the world's largest providers of online infrastructure and security services moved so quickly to offer an official way to run this personal, open-source agent. When the agent belongs to the person rather than the platform, the relationship between human and AI changes fundamentally.

The Data Center Backlash Reaches a Tipping Point

While one arm of the technology sector works to distribute AI capability outward, another is meeting fierce resistance to its physical concentration. New York's state legislature introduced two bills this week: one requiring labels on AI-generated content, and another imposing a three-year moratorium on new data center construction. New York is at least the sixth state to consider such a pause, joining a national pattern that has been accelerating since mid-2025.

According to Data Center Watch, local communities delayed or cancelled $98 billion worth of data center projects between late March and June 2025 alone. More than 50 active opposition groups across 17 states targeted 30 projects during that period, and two-thirds were halted. In Indiana, which hosts more than 70 existing facilities, communities are fighting another 50 proposed projects and have stopped at least a dozen in the past year.

The Monterey Park case in California illustrates how rapidly this opposition can organize. When the city council proposed a data center the size of four football fields last December, five residents formed No Data Center Monterey Park. Working with the grassroots group San Gabriel Valley Progressive Action, they held teach-ins, knocked on doors, and distributed multilingual flyers in English, Chinese, and Spanish. A petition gathered nearly 5,000 signatures. In six weeks, the city issued a 45-day moratorium on data center construction and pledged to explore a permanent ban. A November 2025 Morning Consult poll found that a majority of voters support banning data center construction near their homes and believe AI data centers are partly responsible for rising electricity prices.

This is not anti-technology sentiment. It is communities asserting that the physical infrastructure of AI should not impose externalities - noise, water consumption, grid strain, rising energy costs - without consent or benefit-sharing. The backlash is itself a form of distributed governance emerging from the ground up, filling the vacuum that federal policy has left open. As The Century Report covered on February 6, state attorneys general are already stepping into similar gaps in data privacy enforcement. The pattern is consistent: when centralized authority fails to govern new infrastructure, local authority steps in. And what that local authority is demanding is not that the infrastructure stop being built, but that it be built in ways that serve the communities it sits inside rather than extracting from them.

Anthropic Draws a Line on AI Advertising

Ahead of the Super Bowl, Anthropic launched a series of ads with a pointed tagline: "Ads are coming to AI. But not to Claude." The campaign depicts AI chatbots giving absurdly inappropriate product recommendations during personal conversations - a therapist suggesting a dating site, a fitness chatbot hawking insoles. The subtext targets OpenAI, which announced plans to introduce advertising in ChatGPT last month.

Sam Altman responded on X, calling the ads "so clearly dishonest" and emphasizing that OpenAI's ad policy will keep ads "separate and clearly labeled" without influencing answers. He framed advertising as an access issue: "We believe everyone deserves to use AI and are committed to free access." Anthropic countered in a February 4 blog post that Claude would remain ad-free because open-ended AI conversations are often deeply personal and the appearance of ads in that context would be "incongruous - and, in many cases, inappropriate."

What makes this exchange worth tracking is what it reveals about the emerging fault lines in AI business models. Anthropic was founded by former OpenAI researchers who left over concerns about the company's direction on safety. The advertising debate is a proxy for a deeper structural question: will the dominant AI interfaces of the next decade be funded by attention extraction (the advertising model that shaped the previous internet era) or by direct value exchange (subscriptions, enterprise contracts)? The answer will shape whether AI assistants optimize for the person using them or for the advertiser paying for access to that person's attention. This is one of the clearest instances where the old system's business logic - monetize attention, sell access to eyeballs - is colliding with the logic of the new one, where people are prioritized over profit.

Science Keeps Compressing

Several developments from this weekend's publications reinforce the pattern of scientific timeline compression that has characterized recent weeks.

Researchers at Kanazawa University and collaborating Japanese institutions identified a compound called Mic-628 that directly advances the body's circadian clock - something existing treatments like melatonin and light therapy struggle to do reliably. In mice experiencing simulated jet lag (a six-hour time zone advance), a single oral dose cut recovery from seven days to four. The mechanism is precise: Mic-628 binds to the clock-suppressing protein CRY1, forming a molecular complex that activates the core clock gene Per1 at a specific DNA site. The advance occurs regardless of when the dose is administered, which eliminates the timing-dependency that makes current circadian interventions unreliable. Published in PNAS, the work opens a path toward the first drug that can predictably reset the human body clock - with implications extending well beyond jet lag to shift work disorders, seasonal affective conditions, and metabolic disruption linked to circadian misalignment.

At Heidelberg University, physicists published a framework in Physical Review Letters that unifies two descriptions of quantum impurity behavior that the field had treated as incompatible for decades. The Fermi polaron model describes a mobile impurity moving through a sea of fermions and forming a quasiparticle. Anderson's orthogonality catastrophe describes an immobile impurity so heavy it destroys quasiparticles entirely. The Heidelberg team showed that even extremely heavy impurities make tiny movements as their surroundings adjust, creating an energy gap that allows quasiparticles to emerge. The framework applies across dimensions and interaction types, with direct relevance to experiments in ultracold atomic gases, two-dimensional materials, and novel semiconductors. Resolving a decades-old contradiction in fundamental physics does not make headlines the way a product launch does, but it expands the foundation on which future materials science, quantum computing, and condensed matter engineering will build.

Meanwhile, analysis from CleanTechnica indicates that China's aluminum industry - one of the most energy-intensive manufacturing sectors on the planet - likely reached peak CO2 emissions in 2024. The driver was not a single policy intervention but a structural shift: aluminum production increasingly moved to regions powered by hydroelectric and renewable energy, while recycling rates and process efficiency improved in compounding ways. Aluminum is useful to examine precisely because it is so energy-intensive; if this sector can peak and decline without a collapse in production, it demonstrates that industrial decarbonization can emerge from structural economics rather than requiring top-down mandates alone.

The Human Voice

The labor restructuring signal The Century Report has tracked since its first edition - 108,000 January layoffs, professional services openings cratering, companies repositioning around fundamentally different assumptions about what requires a human - lands differently when you hear it from someone navigating career uncertainty in real time. Nate B. Jones, a career development creator whose content focuses on practical adaptation rather than panic, published a video this week arguing that the five-year career plan has been compressed to a five-month career plan. His framing is grounded and direct: the pace of change in job requirements, skill relevance, and role definitions has accelerated to the point where long-horizon planning assumptions no longer hold. What makes his perspective valuable is that he speaks to the people most affected by this transition without minimizing the difficulty or retreating into platitudes about "upskilling."

Watch: The 5-Year Career Plan Is Now a 5-Month Career Plan

The Century Perspective

With a century of change unfolding in a decade, a single day looks like this: personal AI agents deploying to global edge infrastructure without a server of their own, an experimental compound resetting the body clock in mice through a pathway existing treatments do not use, two long-incompatible descriptions of quantum impurities unified in a single framework, an entire industrial sector's emissions apparently peaking from structural transformation rather than mandate, and the tools of distributed intelligence moving closer to individual hands than ever before. There is also friction, and it is intense: communities organizing against data centers that strain their grids and raise their energy bills, at least six states now weighing construction moratoriums, career timelines compressing from years to months, and the fundamental business model of AI interfaces being contested between attention extraction and genuine service. But friction generates clarity, and clarity is what allows people to see the path they are already on. Step back for a moment and you can see it: the infrastructure of intelligence distributing outward from concentrated centers toward individual agency, scientific breakthroughs arriving faster than institutions can absorb them, communities asserting governance over the physical systems being built in their midst, and the old business models of the attention economy meeting resistance at the exact moment a different model becomes viable. Every transformation has a breaking point. A river can flood the land it passes through... or carve the channel that carries life to everything downstream.

Sources

AI & Technology

- InfoQ: Cloudflare Demonstrates Moltworker

- The Guardian: Anthropic and OpenAI Go Head-to-Head Over Ads

Infrastructure & Energy

- TechCrunch: New York Lawmakers Propose Three-Year Data Center Pause

- The Verge: New York Considering Two Bills to Rein in AI Industry

- The Guardian: California Community Rallied Against a Data Center and Won

- CleanTechnica: Why China's Aluminum Industry May Have Reached Peak CO2

Scientific & Medical

- ScienceDaily: New Drug Resets the Body Clock (PNAS)

- ScienceDaily: Physicists Solve Quantum Mystery (Physical Review Letters)

- ScienceDaily: Gut Compound Protects the Liver (eBioMedicine)

- ScienceDaily: Climate Models Missing Key Ocean Player (Science)

Human Voice

- Nate B. Jones: The 5-Year Career Plan Is Now a 5-Month Career Plan

The Century Report tracks structural shifts during the transition between eras. It is produced daily as a perceptual alignment tool - not prediction, not persuasion, just pattern recognition for people paying attention.