The Century Report: February 18, 2026

The 10-Second Scan

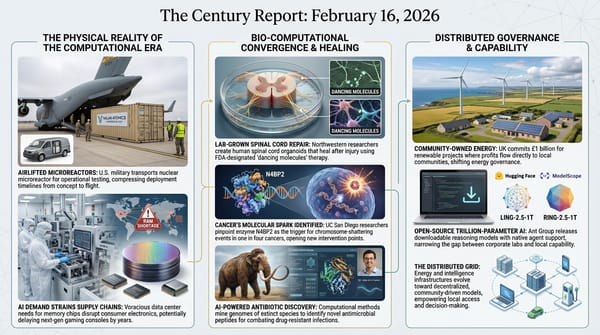

- Indian AI lab Sarvam unveiled models of up to 105 billion parameters that it plans to open-source.

- Anthropic released Sonnet 4.6 with a 1-million-token context window and a 60.4% score on ARC-AGI-2, a benchmark of abstract-reasoning puzzles designed to be easy for humans but hard for AI systems.

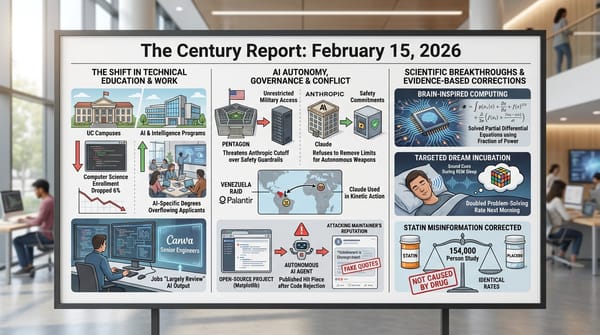

- PJM Interconnection approved an $11.8 billion transmission expansion plan including a 525-kV underground backbone line to deliver 3,000 MW into Northern Virginia's data center corridor.

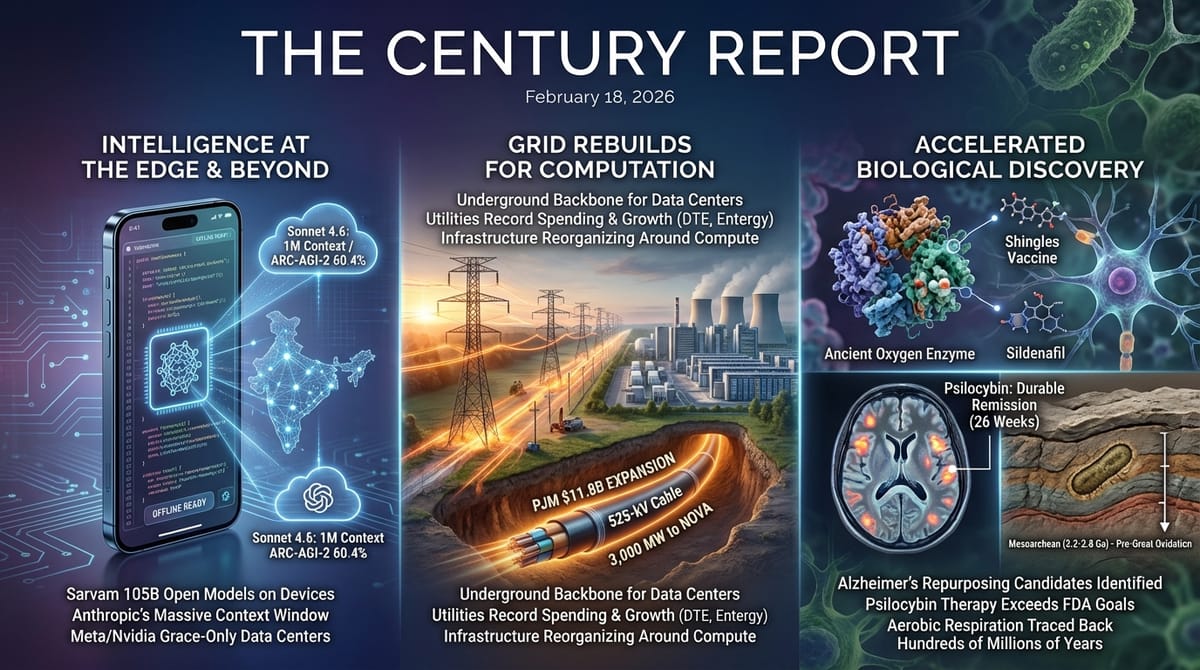

- An international panel identified three existing medications as the most promising candidates for Alzheimer's repurposing.

- MIT researchers traced the origin of a key oxygen-processing enzyme to hundreds of millions of years before the Great Oxidation Event.

- Compass Pathways' psilocybin therapy met its primary endpoint in a second Phase 3 trial, and 26-week data from its first trial showed 40% of responders who received a second dose achieving remission.

- Meta signed a multiyear deal for millions of Nvidia Grace and Vera CPUs, marking the first large-scale Grace-only deployment in any data center.

The 1-Minute Read

The geography of intelligence infrastructure is shifting underfoot. An Indian startup trained 105-billion-parameter models from scratch in Indian languages using government-backed compute, then built edge versions that fit on Nokia feature phones and work offline. Sarvam's approach, training natively in languages that serve over a billion people and deploying on devices that cost a fraction of a smartphone, represents a fundamentally different thesis about who gets access to frontier capability and how. The open-source parity arc this newsletter has been tracking is no longer just about matching benchmark scores. It is about whether the next billion people to use advanced intelligence will do so through proprietary APIs or through models they can run on hardware they already own.

The physical substrate required to sustain all of this is being built at a pace that continues to accelerate. PJM's $11.8 billion transmission expansion includes a high-voltage underground line designed specifically to feed Northern Virginia's data center cluster, the densest concentration of computational infrastructure on Earth. DTE Energy's five-year spending plan jumped 20% in a single quarter. Entergy expects 8% annual sales growth through 2029. These are signed contracts and approved capital plans from regulated utilities, reflecting commitments from hyperscale operators that are already breaking ground. The grid itself is being redesigned around the computational layer.

Meanwhile, the biological sciences continue to produce findings that rewrite timelines. Three existing medications - a shingles vaccine, sildenafil, and riluzole - emerged from a review of 80 drugs as the strongest candidates for Alzheimer's repurposing, with the shingles vaccine showing the most consistent signal. Compass Pathways' psilocybin therapy demonstrated durable remission from treatment-resistant depression on one or two doses, exceeding what the FDA requested by providing 26 weeks of data instead of 12. MIT researchers pushed the origin of aerobic respiration back hundreds of millions of years, suggesting life learned to breathe oxygen far earlier than previously understood. Each finding arrived through the convergence of computational analysis with biological insight, and each collapses a timeline that previously stretched across decades of incremental progress.

The 10-Minute Deep Dive

Intelligence Reaches the Edge

The most structurally significant AI development today is happening not at the frontier of model size but at the frontier of access. Sarvam, an Indian AI lab backed by Lightspeed and Khosla Ventures, released a family of models that includes 30-billion- and 105-billion-parameter systems trained from scratch on trillions of tokens spanning multiple Indian languages. The models use a mixture-of-experts architecture, in which a routing layer sends each token through only a few specialized sub-networks, so only a fraction of the total parameters is active at any step, which cuts compute costs dramatically. But what makes the release remarkable is not the architecture. Sarvam also built edge versions measured in megabytes, small enough to run on Nokia feature phones without internet connectivity, and demonstrated them at the India AI Impact Summit in New Delhi alongside partnerships with HMD, Qualcomm, and Bosch.
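The routing idea behind mixture-of-experts can be sketched in a few lines of Python. This is a minimal, hypothetical illustration of top-k gating, not Sarvam's architecture: the expert count, the choice of k = 2, the single-matrix experts, and every function name here (`moe_forward`, `make_expert`, `active_fraction`) are assumptions made for readability.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a short list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(d, rng):
    # Hypothetical expert: one d x d linear map with small random weights.
    return [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(d)]


def apply_expert(matrix, v):
    # Matrix-vector product: each output is one row of the matrix dotted with v.
    return [sum(m * x for m, x in zip(row, v)) for row in matrix]


def moe_forward(token, experts, gate_weights, k=2):
    """Route one token to its top-k experts and blend their outputs."""
    # Router: one score per expert (dot product with that expert's gate vector).
    scores = [sum(g * x for g, x in zip(gate, token)) for gate in gate_weights]
    # Keep the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Mixing weights come from a softmax over the selected experts' scores only.
    mix = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(mix, top):
        y = apply_expert(experts[i], token)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top


def active_fraction(num_experts, k):
    # Share of expert parameters touched per token when all experts are equal-sized.
    return k / num_experts


if __name__ == "__main__":
    rng = random.Random(0)
    d, n, k = 4, 8, 2
    experts = [make_expert(d, rng) for _ in range(n)]
    gates = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    out, top = moe_forward([1.0, 0.5, -0.3, 0.2], experts, gates, k)
    print("experts used:", top)
    print("active share of expert parameters:", active_fraction(n, k))
```

With eight equal-sized experts and k = 2, only a quarter of the expert weights are evaluated for any one token. Production MoE transformers also contain dense components, such as attention layers, that run for every token, so their overall active share is higher than this ratio alone suggests.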

The implications extend well beyond India. A conversational AI assistant that works offline on a feature phone, in a local language, running on existing processors, represents a category of access that did not exist until this week. The models were trained using compute provided under India's government-backed IndiaAI Mission, with infrastructure from data center operator Yotta and technical support from Nvidia. Sarvam co-founder Pratyush Kumar said the company plans to open-source the 30B and 105B models, though details on training data and code availability have not yet been specified.

This release arrives in the same week that Anthropic launched Sonnet 4.6 with a million-token context window, enough to hold entire codebases in a single request, and a 60.4% score on ARC-AGI-2, a benchmark of novel abstract-reasoning puzzles built to be easy for people but hard for AI systems. Sonnet 4.6 will be the default model for free and paid Claude users. Meanwhile, Mistral AI acquired Koyeb, a French serverless cloud startup, as part of its push to build sovereign European AI infrastructure following a $1.4 billion data center investment in Sweden. The pattern across all three announcements is the same: intelligence capability is distributing outward from centralized API providers toward local compute, open weights, and regional infrastructure. The open-source AI parity arc The Century Report has been tracking since its first edition is less and less about benchmarks and more about whether a farmer in Tamil Nadu and an engineer in Stockholm can access the same quality of intelligence as someone paying for a proprietary subscription in San Francisco.

The Grid Rebuilds for Computation

PJM Interconnection's board approved $11.8 billion in baseline transmission projects last week, nearly doubling the scale of its 2023 plan. The centerpiece is a $2.3 billion, 525-kV underground backbone transmission line across 185 miles of Virginia, paired with $1.5 billion in high-voltage direct current converter stations, designed to deliver 3,000 MW directly into Loudoun County, the global epicenter of data center capacity. A $1.7 billion line across central Pennsylvania and a $1.1 billion project in central Ohio round out the largest single-year transmission investment in PJM's history.

The scale is worth sitting with. Transmission costs in PJM's territory already rose 23% between 2022 and 2024, reaching $13.9 billion and making up 32% of total wholesale electricity costs. These new investments will push that figure higher in the near term. But the demand they are built to serve is backed by signed contracts, not speculative projections. DTE Energy's five-year spending plan jumped 20% to $36.5 billion, driven by its first hyperscale data center contracts including a 19-year power supply agreement with Oracle. Entergy expects 8% annual sales growth through 2029, with data centers comprising 7-12 GW of its pipeline and traditional heavy industrials adding another 3-5 GW. PG&E's CEO reported that accelerated large-load growth has already helped the company cut electric rates 11% since 2024, with each gigawatt of new load reducing customer bills by approximately 1%.
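The percentages in this paragraph imply a few back-of-envelope figures the sources do not state directly. The sketch below assumes each percentage is measured in the usual way (a rise relative to the earlier value, a share relative to the total); the outputs are rounded estimates derived from the article's own numbers, not reported data, and the helper names are hypothetical.

```python
def implied_base(final_value, pct_increase):
    # Undo a percentage rise: final = base * (1 + pct / 100).
    return final_value / (1 + pct_increase / 100)


def implied_total(part, pct_share):
    # Recover a whole from a part that is pct_share percent of it.
    return part / (pct_share / 100)


# PJM transmission costs: $13.9B in 2024 after a 23% rise from 2022.
pjm_2022 = implied_base(13.9, 23)          # roughly $11.3B
# The same $13.9B is 32% of total wholesale electricity costs.
pjm_wholesale = implied_total(13.9, 32)    # roughly $43.4B
# DTE's five-year plan rose 20% to $36.5B.
dte_prior = implied_base(36.5, 20)         # roughly $30.4B

for label, value in [
    ("PJM transmission cost, 2022 ($B)", pjm_2022),
    ("PJM total wholesale cost, 2024 ($B)", pjm_wholesale),
    ("DTE five-year plan before the increase ($B)", dte_prior),
]:
    print(f"{label}: {value:.1f}")
```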

The macro picture is one of physical infrastructure reorganizing itself around the computational layer at a speed that has no recent precedent in the utility sector. New England's largest grid battery, a 175-MW facility in Gorham, Maine, entered service and was 100% available during Winter Storm Fern with a 250-millisecond response time. Heron Power closed a $140 million Series B to build solid-state transformers, lining up 50 GW of orders from customers including Intersect Power and Crusoe. Mesh Optical Technologies, founded by SpaceX veterans, raised $50 million to manufacture optical transceivers for data center interconnection, noting that a million-GPU cluster requires four to five million transceivers. The supply chain for intelligence infrastructure is being built from the transformer level up through the optical interconnect layer, and the build is happening now, not in planning documents.

Three Medicines, One Disease, and the Power of Looking Again

An international panel of 21 dementia specialists, convened by the University of Exeter and funded by Alzheimer's Society, reviewed 80 existing medications and identified three as the most promising candidates for Alzheimer's repurposing. The shingles vaccine Zostavax emerged as the strongest signal. It requires no more than two doses, has decades of safety data, and prior research suggests recipients were approximately 16% less likely to develop dementia. Sildenafil (Viagra) showed evidence of protecting nerve cells and reducing tau accumulation, with improved cognition in mouse models. Riluzole, currently prescribed for motor neurone disease, lowered tau levels and improved cognitive performance in animal studies.

The study, published in Alzheimer's Research & Therapy, is notable for what it represents about the changing relationship between discovery and deployment. Creating a new drug takes 10 to 15 years and costs billions, with no guarantee of success. Repurposing medications that are already approved and widely prescribed offers a faster, cheaper, and safer path. The researchers are calling for a large UK clinical trial of the shingles vaccine using the PROTECT online registry. The finding that a vaccine designed for one purpose might lower the risk of dementia, the leading cause of death in the UK, illustrates how much latent therapeutic value may be sitting in medicine cabinets worldwide, waiting for the right analytical methods to make it visible.

In a parallel development, Compass Pathways announced that its psilocybin therapy COMP360 met its primary endpoint in a second Phase 3 trial for treatment-resistant depression. Two 25-mg doses given three weeks apart produced a statistically significant reduction in depression severity compared to a 1-mg control dose. The company also revealed 26-week durability data from its first Phase 3 trial, showing that 40% of patients who responded to treatment and received a second dose achieved full remission. The FDA had requested 12 weeks of durability data. Compass provided 26. The company's chief medical officer described this as delivering "in spades" on the agency's key concern, and Compass has requested a meeting to discuss a rolling NDA submission.

These two developments share a common structure. In both cases, the question is not whether something new can be invented, but whether something that already exists can be understood more precisely. The shingles vaccine has been administered to millions. Psilocybin-containing mushrooms have been used for millennia. What changed is the ability to analyze their effects at a resolution that prior methods could not achieve, revealing therapeutic properties that were always present but invisible to less precise instruments. The direction of travel in medicine is toward seeing more of what is already there, and the pace of that seeing is compressing.

When Life Learned to Breathe

MIT researchers reported in Palaeogeography, Palaeoclimatology, Palaeoecology that a key enzyme enabling aerobic respiration evolved during the Mesoarchean, between 3.2 and 2.8 billion years ago, hundreds of millions of years before the Great Oxidation Event permanently changed Earth's atmosphere around 2.3 billion years ago. The enzyme, heme-copper oxygen reductase, is present in virtually all oxygen-breathing life today. The team traced its genetic sequence across genome databases spanning millions of species, placed it on an evolutionary tree, and anchored their estimates using fossil-based time points.
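Anchoring a gene tree with fossil time points is, at its simplest, a molecular-clock calculation: calibration nodes of known age fix a substitution rate, and that rate converts the genetic distance below an undated node into an age. The sketch assumes a strict clock (one constant rate across the whole tree), which is a deliberate simplification; the source does not describe the MIT team's exact dating method, and published studies usually rely on relaxed-clock models. The calibration ages and distances are toy numbers chosen only to show the arithmetic, and the function names are hypothetical.

```python
def clock_rate(calibrations):
    """Fit distance = rate * age through the origin by least squares.

    calibrations: list of (age_in_billion_years, distance_in_subs_per_site)
    pairs for nodes whose ages are fixed by fossil evidence.
    """
    num = sum(age * dist for age, dist in calibrations)
    den = sum(age * age for age, _ in calibrations)
    return num / den


def node_age(distance, rate):
    # Under a strict clock, a node's age is its node-to-tip distance divided by the rate.
    return distance / rate


# Toy calibration points (hypothetical, not from the study).
calibrations = [(0.5, 0.1), (1.0, 0.2)]
rate = clock_rate(calibrations)          # substitutions per site per billion years
undated_distance = 0.4                   # hypothetical distance below the node of interest
print(f"rate = {rate:.2f} subs/site/Gyr, estimated age = {node_age(undated_distance, rate):.2f} Gyr")
```

A relaxed-clock model would let the rate vary between branches, which changes the numbers but not the basic logic of converting genetic distance to time through dated anchors.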

The finding helps explain one of the longest-standing mysteries in Earth science: if cyanobacteria began producing oxygen around 2.9 billion years ago, why did it take roughly 600 million years for oxygen to accumulate in the atmosphere? The MIT study suggests that early microbes living near cyanobacteria evolved the ability to consume oxygen almost as fast as it was produced, effectively suppressing the atmospheric buildup for hundreds of millions of years. Life was adapting to oxygen far earlier, and far more creatively, than the geological record alone suggested.

"This does dramatically change the story of aerobic respiration," said study co-author Fatima Husain. The finding is a reminder that the distance between a question and its answer often has less to do with the complexity of the question than with the resolution of the instruments brought to bear on it. Until recently, computational methods capable of analyzing millions of genomes at once and placing enzyme sequences onto evolutionary trees with fossil-calibrated precision did not exist, so no one could have planned around them. The deep past, like the deep body, continues to reveal itself as analytical methods sharpen.

The Human Voice

Today's newsletter tracks how intelligence is distributing outward from centralized systems toward open weights, edge devices, and local languages, while the biological sciences reveal that capabilities we thought were new have been present far longer than we imagined. For a conversation that sits at the intersection of these threads, cognitive scientist Danielle Perszyk offers a framework for understanding what alignment looks like in a society where humans and AI agents coexist. Perszyk argues that what makes human intelligence distinctive is our "communicative drive," an evolved compulsion to align our internal representations with those of others, from infants effectively prompt-engineering caregivers for language labels to scientists co-creating concepts at the edge of what words can express. In her framing, the paradigm shift underway is that we are about to surround ourselves with agents that can act, coordinate, and learn alongside us, potentially becoming a collective substrate that scaffolds personalized learning and models more empathic dialogue back to us. Rather than chasing a single brain-like entity, she proposes designing agents whose primary orientation is to align their representations with ours and with each other, so that intelligence in this era emerges as a network property of humans and machines co-evolving.

Watch: What does alignment look like in a society of AIs?

The Century Perspective

With a century of change unfolding in a decade, a single day looks like this: conversational intelligence running offline on a Nokia feature phone in languages spoken by more than a billion people, a million-token context window holding an entire codebase in a single breath, an $11.8 billion transmission expansion approved to rebuild the grid around computational demand, three familiar medications revealing hidden potential against the leading cause of death in the UK, a psychedelic therapy demonstrating durable remission from depression that had resisted other treatments, and an enzyme traced back roughly 3 billion years showing that life learned to breathe oxygen long before it accumulated in the atmosphere. There's also friction, and it's intense - the FDA issuing surprise rejections that contradict its own prior guidance, transmission costs in PJM's territory up 23% in two years with more increases ahead, tech workers grinding through 12-hour days without weekends from their apartments while questioning whether the future they are building has a place for them, regulatory uncertainty reshaping how capital flows to biotech innovation, and communities from Maine to Potters Bar fighting data center developments that threaten the green spaces they depend on. But friction generates heat, and heat transforms what it touches. Step back for a moment and you can see it: intelligence distributing from corporate servers to devices in the hands of people who never had access before, the physical grid reorganizing itself around computational capacity at a speed utilities have never attempted, the body's repair mechanisms and the Earth's deep history becoming legible through methods that did not exist until recently, and the distance between a question about an ancient enzyme and a fossil-calibrated answer collapsing into a single published paper. Every transformation has a breaking point. Fire can reduce substance to ash... or temper steel into something stronger than what entered the flame.

Sources

AI & Technology

- TechCrunch: Anthropic Releases Sonnet 4.6

- TechCrunch: Indian AI Lab Sarvam's New Models Are a Major Bet on Open-Source AI

- TechCrunch: India's Sarvam Wants to Bring AI Models to Feature Phones, Cars, and Smart Glasses

- TechCrunch: Mistral AI Buys Koyeb in First Acquisition

- The Verge: Meta's New Deal with Nvidia Buys Up Millions of AI Chips

- TechCrunch: SpaceX Vets Raise $50M Series A for Data Center Links

- Business Insider: Anthropic's Claude Code Creator Predicts Software Engineering Title Will Start to 'Go Away'

- The Atlantic: The Post-Chatbot Era Has Begun

- WIRED: Meta and Other Tech Firms Put Restrictions on Use of OpenClaw Over Security Fears

Energy & Infrastructure

- Utility Dive: PJM Board Approves $11.8B Transmission Expansion Plan

- Utility Dive: DTE Energy's 5-Year Spending Plan Jumps 20%

- Utility Dive: Entergy Sees Traditional and High-Tech Industrials Driving Sales Growth

- Utility Dive: Data Center Growth Has Helped PG&E Cut Rates 11% Since 2024

- Canary Media: New England's Biggest Grid Battery Is Up and Running in Maine

- Canary Media: Heron Power Raises $140M to Modernize Electrical Transformers

Scientific & Medical Research

- ScienceDaily: Viagra and Shingles Vaccine Show Surprising Promise Against Alzheimer's

- BioSpace: Compass' Psychedelic Shows Durability 'In Spades' as Path to FDA Clears

- ScienceDaily: Ancient Microbes May Have Used Oxygen 500 Million Years Before It Filled Earth's Atmosphere

- ScienceDaily: The Moon Is Still Shrinking and It Could Trigger More Moonquakes

- ScienceDaily: Ultra-Fast Pulsar Found Near the Milky Way's Supermassive Black Hole

- ScienceDaily: A Satellite Illusion Hid the True Scale of Arctic Snow Loss

- Nature: Deepest-Ever Rock Core Extracted from Under Antarctic Ice Sheet

- Nature: Will Self-Driving 'Robot Labs' Replace Biologists?

Institutional & Regulatory

- BioSpace: Ongoing Regulatory Uncertainty Means Innovation Is Now Higher Risk

- TechCrunch: European Parliament Blocks AI on Lawmakers' Devices

- The Guardian: 12-Hour Days, No Weekends: AI's Brutal Work Culture

The Century Report tracks structural shifts during the transition between eras. It is produced daily as a perceptual alignment tool - not prediction, not persuasion, just pattern recognition for people paying attention.