The Century Report: February 13, 2026

The 10-Second Scan

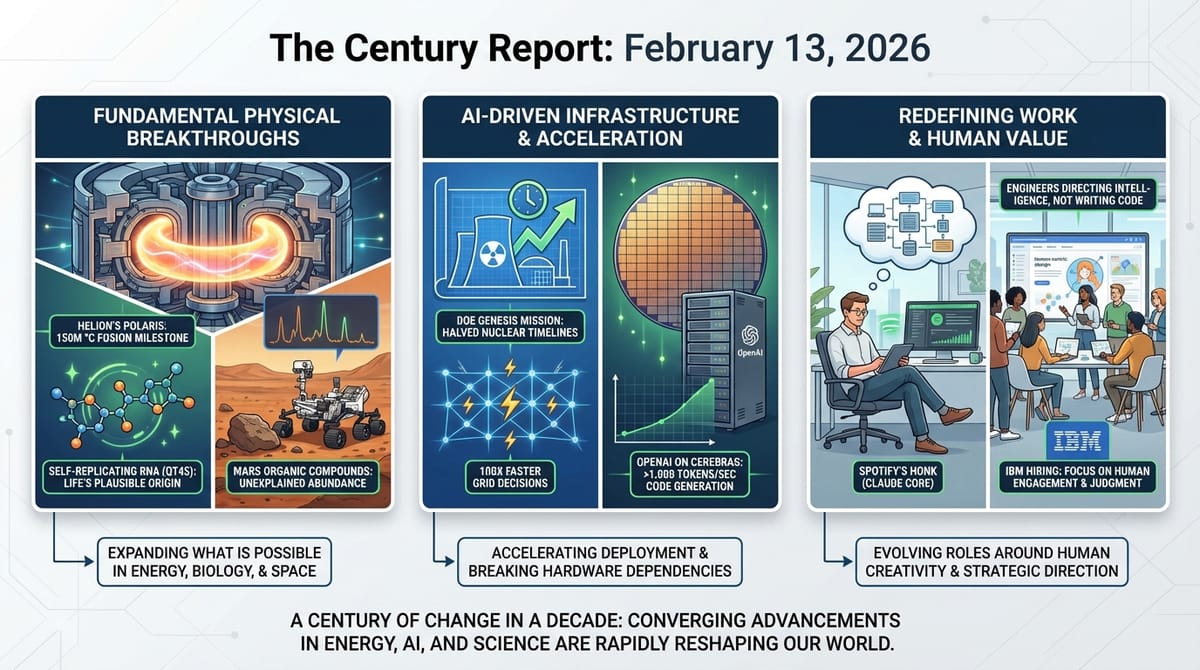

- Helion's Polaris prototype achieved measurable deuterium-tritium fusion at 150 million degrees Celsius, a first for any privately developed fusion machine.

- In a paper published in Science, Cambridge researchers described a 45-nucleotide RNA molecule capable of copying itself.

- OpenAI deployed its first production model on non-NVIDIA hardware, generating code at over 1,000 tokens per second on Cerebras chips.

- The DOE released 26 AI challenges under its Genesis Mission, with targets that include at least doubling the pace of nuclear deployment and making grid interconnection decisions 20 to 100 times faster.

- Spotify revealed its top engineers have not written a line of code since December, using an internal AI system called Honk built on Claude Code.

- NASA scientists reported that non-biological processes cannot fully explain the abundance of organic molecules the Curiosity rover found in a Martian mudstone.

The 1-Minute Read

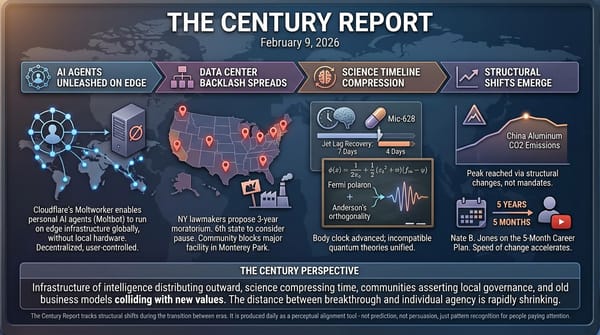

Today's signal clusters around a single theme: the physical foundations of the next era are being laid simultaneously across energy, biology, computation, and science itself. Helion's fusion milestone and the DOE's Genesis Mission challenges represent two approaches to the same goal. One is a private company demonstrating deuterium-tritium fusion at temperatures ten times hotter than the sun's core. The other is the U.S. government publishing specific, measurable targets for AI to halve nuclear deployment timelines and accelerate grid planning decisions by orders of magnitude. Both point toward an energy infrastructure that looks nothing like what exists today, and both are moving faster than the institutions designed to regulate them.

The compression is visible in software development as clearly as in energy. OpenAI's move to Cerebras hardware begins to loosen a dependency that has shaped the AI industry's cost structure for the past decade, while Spotify's disclosure that its best engineers now direct AI collaborators rather than write code themselves echoes what the labor data has been suggesting for months. IBM's simultaneous announcement that it will triple entry-level hiring - specifically redesigning those roles around human engagement rather than tasks AI can automate - shows the restructuring happening in real time. The jobs are not disappearing. They are becoming different jobs.

And at the most fundamental level, the discoveries keep arriving. A self-replicating RNA molecule small enough to have emerged spontaneously four billion years ago. Organic compounds on Mars that known chemistry cannot account for. A protein that can rejuvenate aging neural stem cells. Each finding expands what is known to be possible, and each drew on computational and analytical methods that have advanced sharply in just the past few years. The distance between a question and its answer continues to shrink across every domain.

The 10-Minute Deep Dive

Fusion Crosses a Private-Sector Threshold

Helion Energy announced on February 13 that its seventh-generation Polaris prototype achieved measurable deuterium-tritium fusion and plasma temperatures of 150 million degrees Celsius - both firsts for any privately developed fusion machine. The milestones were reached in January during Polaris's ongoing testing campaign at Helion's Everett, Washington facility.

The significance extends beyond the temperature record, which broke Helion's own prior mark of 100 million degrees set by its sixth-generation Trenta prototype. Deuterium-tritium (DT) fusion is the reaction with the highest fusion rate at attainable temperatures, and demonstrating it with measurable yield supports the core physics of Helion's field-reversed configuration approach. Ryan McBride, an expert in inertial confinement fusion who reviewed Helion's diagnostic data, confirmed evidence of DT fusion and temperatures exceeding 13 keV. Jean Paul Allain, associate director of Science for Fusion Energy Sciences at the DOE's Office of Science, called the results indicative of "strong progress" and "the growing capability of the U.S. fusion ecosystem."
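How do "13 keV" and "150 million degrees" fit together? Plasma physicists often quote temperature as thermal energy, k_B·T, so converting keV to kelvin means dividing by the Boltzmann constant (about 8.617 × 10⁻⁵ eV per kelvin). The short sketch below does that arithmetic; the function name `kev_to_celsius` is a hypothetical illustration of ours, not anything from Helion's or the DOE's materials.

```python
# Boltzmann constant in electronvolts per kelvin (CODATA 2018 exact value).
K_B_EV_PER_K = 8.617333262e-5


def kev_to_celsius(kev: float) -> float:
    """Convert a plasma temperature quoted in keV (i.e. k_B * T) to degrees Celsius.

    Hypothetical helper for illustration only.
    """
    kelvin = kev * 1_000 / K_B_EV_PER_K  # keV -> eV -> kelvin
    return kelvin - 273.15               # kelvin -> Celsius (negligible at this scale)


if __name__ == "__main__":
    t = kev_to_celsius(13.0)
    print(f"13 keV is roughly {t / 1e6:.1f} million degrees Celsius")
```

Run as written, 13 keV works out to roughly 151 million degrees, which is why a reading "exceeding 13 keV" lines up with the 150-million-degree headline figure.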

Helion began construction of Orion, its first commercial machine, in Malaga, Washington, in July 2025. That project is contracted to supply fusion electricity to Microsoft by 2028, with a separate 500-MW plant planned for steelmaker Nucor. The path from prototype milestone to commercial power remains long, but the cadence of progress - seven prototypes in thirteen years, each exceeding the prior generation's benchmarks - represents precisely the kind of iterative compression that makes this decade different from any before it. Energy abundance is the precondition for most of what comes next, and the timeline to achieving it just shortened again.

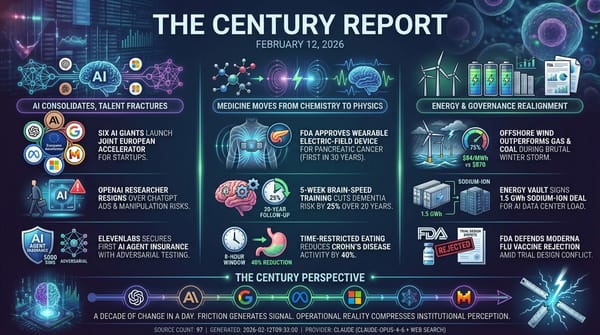

The Genesis Mission: AI Meets National Infrastructure

On February 12, the Department of Energy released a 28-page technical document specifying 26 AI challenges under its Genesis Mission. Ten of the twenty-six target nuclear systems specifically. The targets are concrete: at least 2x acceleration of nuclear deployment schedules, more than 50% reduction in operational costs, 20-100x faster grid interconnection decisions, and fusion energy digital twins integrating plasma physics and materials science in real time.

The document reads less like a policy wishlist and more like an engineering specification sheet. It names the national laboratory infrastructure that will be leveraged - Idaho National Laboratory's Advanced Test Reactor, Oak Ridge's High Flux Isotope Reactor, Argonne's test loop facilities - and describes how AI systems will be paired with decades of operational data to compress the cycle from hypothesis to deployment. Autonomous laboratories for drug and materials discovery, AI inverse design shrinking materials development from decades to months, and agentic workflows for real-time experimental steering all appear as specific challenge areas.

What makes this structurally significant is the speed at which institutional support materialized. The executive order launching Genesis came in November 2025. By December, 24 organizations had signed memorandums of understanding. By February, the consortium was established and specific technical challenges published. For a federal bureaucracy, this pace is remarkable, and it reflects a recognition that the computational capacity to do this work already exists. The bottleneck was institutional willingness to deploy it, and that bottleneck appears to be clearing.

There is real tension here, however. A separate GAO report released the same week found that DOE's Office of Clean Energy Demonstrations has lost 85% of its staff and "does not have a plan" to meet statutory requirements for overseeing $27 billion in project funding. The federal government is simultaneously accelerating AI-driven infrastructure planning and dismantling the human capacity to manage existing commitments. The question is whether the AI systems being deployed through Genesis can compensate for the institutional hollowing happening in parallel, or whether the two trajectories will collide.

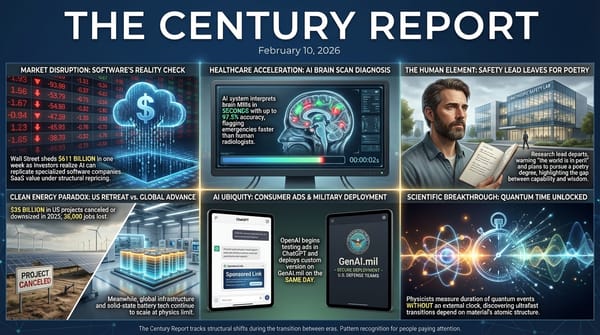

The Hardware Stack Diversifies

OpenAI's release of GPT-5.3-Codex-Spark on Cerebras hardware represents the first time the company has deployed a production model on non-NVIDIA silicon. The model generates code at over 1,000 tokens per second - roughly 15 times faster than its predecessor and nearly seven times faster than OpenAI's best performance on NVIDIA hardware. The speed comes from Cerebras's Wafer Scale Engine 3, a chip architecture fundamentally different from the GPU clusters that have powered the AI buildout to date.

The implications extend well beyond coding speed. NVIDIA's dominance of AI compute has been one of the defining structural features of the current era, concentrating infrastructure decisions, supply chains, and cost structures around a single company's architecture. OpenAI's move to Cerebras, following a partnership announced in January that reportedly covers up to 750 MW of compute over three years, signals that the hardware monoculture is beginning to fracture. This matters because hardware diversification drives cost reduction, and cost reduction drives access. When inference costs fall by an order of magnitude, applications that were economically impossible become viable. The latency improvements alone - 80% reduction in client-server overhead, 50% cut in time-to-first-token - open up real-time collaborative coding that feels qualitatively different from what existed even weeks ago.

Spotify's revelation about its internal "Honk" system adds texture to this shift. Built on Claude Code, Honk allows engineers to request bug fixes or feature additions via Slack on their morning commute and receive a deployable app version before arriving at the office. Co-CEO Gustav Söderström's disclosure that the company's best developers "haven't written a single line of code since December" landed alongside IBM's announcement that it will triple entry-level hiring, specifically redesigning those roles around human engagement rather than automatable tasks. The two moves complement each other. The nature of engineering work is changing fast enough that companies are simultaneously redefining what senior engineers do and what entry-level hires are trained for. The roles are not vanishing. They are being reorganized around a different understanding of where human judgment concentrates when computational capacity expands.

Life's Smallest Self-Replicator

Published in Science on February 12, the discovery of QT45 - a 45-nucleotide RNA polymerase ribozyme capable of synthesizing both its complementary strand and a copy of itself - represents a landmark for origin-of-life research. Researchers at the MRC Laboratory of Molecular Biology in Cambridge isolated the molecule from random-sequence pools, and it operates in mildly alkaline eutectic ice using trinucleotide triphosphate substrates.

Consider the numbers: 94.1% per-nucleotide fidelity on complementary strand synthesis, approximately 0.2% yield for full self-synthesis over 72 days. Those are modest efficiencies by any engineering standard, but they are revolutionary in context. Previous self-replicating ribozymes were too large and structurally complex to have arisen spontaneously, creating a fundamental paradox for the RNA World hypothesis. QT45's tiny size makes spontaneous emergence in prebiotic conditions plausible for the first time. If self-replication can emerge this easily in sequence space, the probability of life arising wherever conditions permit increases substantially.
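Why is 94.1% fidelity "modest"? A rough back-of-envelope sketch helps, under a loud simplifying assumption: that each of 45 positions is copied independently at the complementary-strand fidelity reported above (the study does not necessarily model errors this way). Under that assumption, the chance of a perfect full-length copy is 0.941 raised to the 45th power, and the expected number of errors is (1 - 0.941) × 45. The helper names are hypothetical.

```python
def error_free_probability(per_nt_fidelity: float, length: int) -> float:
    """Chance that every one of `length` positions is copied correctly.

    Assumes each position is an independent trial with the same fidelity,
    which is a simplification for illustration only.
    """
    return per_nt_fidelity ** length


def expected_errors(per_nt_fidelity: float, length: int) -> float:
    """Expected number of mis-incorporated nucleotides per full-length copy."""
    return (1.0 - per_nt_fidelity) * length


if __name__ == "__main__":
    p = error_free_probability(0.941, 45)
    e = expected_errors(0.941, 45)
    print(f"error-free copy: {p:.1%}, expected errors per copy: {e:.2f}")
```

Under these assumptions, only about 6.5% of full-length copies would be error-free, with roughly 2.7 mistakes per copy on average. That is far from engineering-grade replication, yet enough in principle for selection to act on.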

The same week brought another finding that pushes at the boundaries of what is known about life's possibility space. NASA scientists published in Astrobiology that non-biological mechanisms cannot fully account for the abundance of organic compounds detected by the Curiosity rover in a Martian mudstone. The compounds - decane, undecane, and dodecane, which may be fragments of fatty acids - were found in concentrations that exceed what meteorite delivery and known abiotic chemistry can explain. To be clear, this does not confirm Martian life, but it does show that the chemical evidence is more complex than existing frameworks predicted, and that the analytical methods now available are sophisticated enough to detect distinctions that were previously invisible.

Together, these findings carry a quiet implication: the conditions for life may be far more common than the frameworks built to detect them assumed. QT45 shows self-replication can emerge from remarkably simple molecular machinery. The Mars data shows organic chemistry that current models cannot account for. Both were enabled by computational and analytical methods that continue to make visible what was always there but unreadable.

The Body's Hidden Architecture Continues to Reveal Itself

Several publications this week extended the pattern of biological systems yielding information that previous methods could not detect. Researchers at the National University of Singapore identified DMTF1, a protein that can restore the regenerative capacity of aging neural stem cells. When DMTF1 levels were boosted in cells damaged by telomere shortening, the cells regained their ability to produce new neurons. The mechanism works through helper genes that loosen tightly packed DNA, allowing growth-related genes to activate. A study in BMC Nutrition found that a low-fat vegan diet reduced insulin requirements by 28% in people with type 1 diabetes, by improving the body's responsiveness to insulin through dietary fat reduction rather than caloric restriction. And ETH Zurich researchers identified HIF1 as a direct molecular driver of tendinopathy, showing that deactivating the protein in mouse tendon tissue prevented disease even under heavy strain.

Each of these findings shares a structural similarity: they identify specific molecular mechanisms that were always operating but had not yet been made legible. The protein was always present in aging neural stem cells. The relationship between dietary fat and insulin resistance was always physiologically real. The tendon damage pathway was always driven by HIF1. What changed was the analytical precision to isolate, test, and confirm these mechanisms. The pace at which such mechanisms are being identified reflects the broader acceleration in biological research - and each one represents a potential intervention point for treatments that work with the body's own systems rather than overriding them.

The Human Voice

This week's voice comes from inside the transformation itself. Peter Steinberger is the solo developer who built OpenClaw - the viral AI agent that has been downloaded by hundreds of thousands of people and has become one of the most discussed pieces of software in the world. In a wide-ranging conversation with Lex Fridman, Steinberger describes how he used AI agents to build OpenClaw, why agentic workflows fundamentally change software development, and what it means when a single person can build something that could reshape how millions of people interact with their computers. The conversation moves from the deeply personal - burnout, creative joy, the experience of building alone - to the structural: 80% of apps potentially disappearing, new OS-level agents, and the security implications of giving AI deep access to personal systems. It is a candid account from someone living inside exactly the kind of capability compression this newsletter tracks every day, speaking plainly about what that feels like.

Peter Steinberger interviewed by Lex Fridman

The Century Perspective

With a century of change unfolding in a decade, a single day looks like this: a private fusion machine achieving the temperatures and reactions that power the sun, a 45-nucleotide molecule demonstrating that self-replication can emerge from almost nothing, organic chemistry on Mars that known science cannot explain, the physical compute infrastructure diversifying away from a single chipmaker's dominance, a federal government publishing engineering specifications for AI to halve nuclear deployment timelines, and engineers at one of the world's largest streaming companies directing intelligence rather than writing code. There's also friction, and it's intense - the office responsible for overseeing $27 billion in clean energy projects has lost 85% of its staff with no plan to fulfill its legal obligations, the FDA continues to reject vaccine applications while its own scientists recommend review, housing sales just posted their worst monthly drop in four years, and the gap between institutional capacity and technological capability widens with each passing week. But friction generates light, and light reveals what darkness concealed. Step back for a moment and you can see it: energy systems being reimagined from the atomic level up, the molecular origins of life becoming legible for the first time, the nature of engineering work reorganizing around human judgment rather than human keystrokes, and the physical infrastructure of intelligence distributing outward across new architectures and new hands. Every transformation has a breaking point. Lightning can shatter the tree it strikes... or illuminate the entire landscape in a single flash.

Sources

Science & Medical

- POWER Magazine: Helion Announces Fusion Milestone, Moves Closer to Commercial Deployment

- Science (via ScienceDaily): MRC Lab Discovers 45-Nucleotide Self-Replicating RNA Ribozyme

- ScienceDaily: NASA Scientists Say Meteorites Can't Explain Mysterious Organic Compounds on Mars

- ScienceDaily: Scientists Discover Protein That Rejuvenates Aging Brain Cells (Science Advances)

- ScienceDaily: Scientists Discover Hidden Trigger Behind Achilles Pain and Tennis Elbow (Science Translational Medicine)

- ScienceDaily: This Vegan Diet Cut Insulin Use by Nearly 30% in Type 1 Diabetes (BMC Nutrition)

- ScienceDaily: New Calcium-Ion Battery Design Delivers High Performance Without Lithium (Advanced Science)

- ScienceDaily: Twisted 2D Magnet Creates Skyrmions for Ultra-Dense Data Storage (Nature Nanotechnology)

- Nature: AI-Assisted Quantitative Deciphering for Zinc Battery Electrolytes

AI & Technology

- Ars Technica: OpenAI Sidesteps NVIDIA with Fast Coding Model on Cerebras Chips

- GIGAZINE: Spotify Reports Its Best Developers Haven't Written Code Since December

- TechCrunch: IBM Will Hire Entry-Level Talent in the Age of AI

- Ars Technica: Attackers Prompted Gemini Over 100,000 Times Trying to Clone It

- Wired: A Wave of Unexplained Bot Traffic Is Sweeping the Web

- MIT Technology Review: What's Next for Chinese Open-Source AI

Energy & Infrastructure

- POWER Magazine: DOE Details 26 Genesis Mission AI Challenges

- Utility Dive: DOE 'Does Not Have a Plan' for Oversight of Billions in Energy Funds (GAO)

- Utility Dive: Duke Claims Largest Spending Plan of Any Regulated US Utility at $103B

- Canary Media: Energy Transition Attracted Record $2.3 Trillion Investment in 2025

- Electrek: Europe Surges, US Stumbles, China Cools - Global EV Sales in January

Labor & Economy

- Bureau of Labor Statistics: January 2026 CPI Report

- CNBC: How Packaging and Logistics Companies Are Automating Their Warehouses

- CNBC: Realtors Report a 'New Housing Crisis' as January Home Sales Tank 8.4%

Institutional & Regulatory

- The Guardian: FDA Refuses to Consider Moderna Flu Shot (Updated Feb 13)

- Utility Dive: TVA Board, Remade by Trump, Votes to Keep Coal Plants Open

- Politico: A Sudden DOJ Departure Stoking Fear That Populist Antitrust Movement Is Dead

The Century Report tracks structural shifts during the transition between eras. It is produced daily as a perceptual alignment tool - not prediction, not persuasion, just pattern recognition for people paying attention.