The Century Report: February 12, 2026

The 10-Second Scan

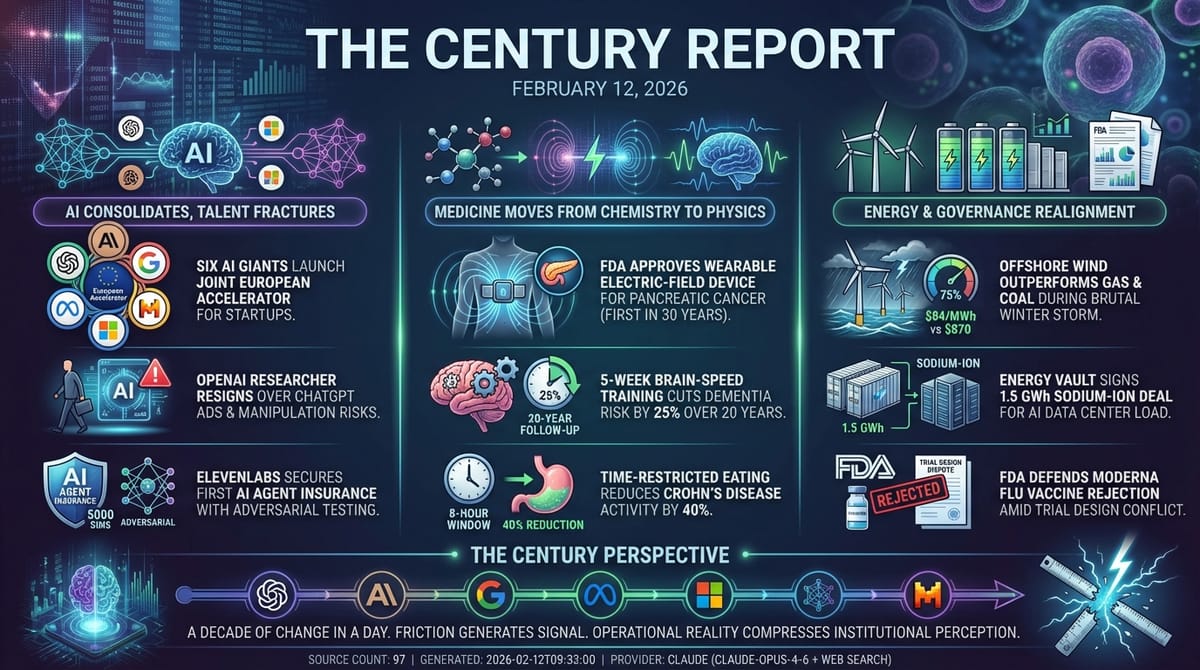

- OpenAI, Anthropic, Google, Meta, Microsoft, and Mistral jointly launched an accelerator for European AI startups building on their foundation models.

- An OpenAI researcher resigned over ChatGPT ads, warning the company risks becoming "the next Facebook."

- The FDA approved a wearable electric-field device for locally advanced pancreatic cancer - the first new treatment for this stage of the disease in nearly 30 years.

- Five weeks of brain-speed training cut dementia diagnoses by 25% over a 20-year follow-up in the longest cognitive intervention trial ever conducted.

- America's two offshore wind farms outperformed gas and coal plants during January's brutal cold snap, with Vineyard Wind hitting a 75% capacity factor during Winter Storm Fern.

- Energy Vault signed a 1.5 GWh sodium-ion battery deal with Peak Energy to build storage systems designed specifically for AI data center load volatility.

- ElevenLabs became the first company to insure its AI agents like employees, backed by a new certification requiring 5,000 adversarial simulations.

The 1-Minute Read

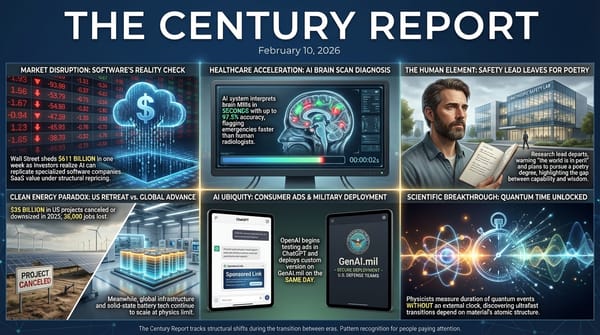

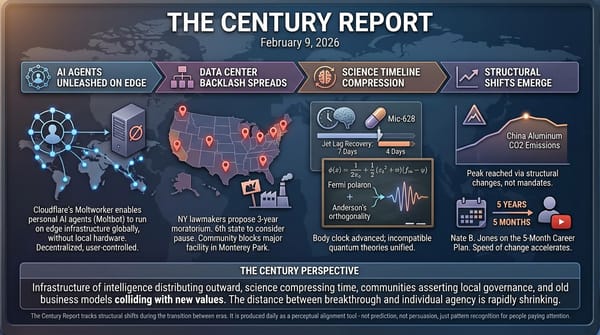

Today's signal converges on a single uncomfortable realization: the systems designed to measure, regulate, and price the present are running on data that no longer describes it. OpenAI, Anthropic, Google, Meta, Microsoft, and Mistral just launched a joint accelerator for European AI startups - companies that compete for talent, compute, and market position are now collaborating to shape the next generation of builders working on top of their foundation models. This move should not be mistaken for altruism. It is a collective recognition that the infrastructure layer is consolidating faster than any individual lab can manage alone, and that the real value lies less in controlling the models than in ensuring enough developers build dependencies on them. In a separate but related story, ElevenLabs secured insurance for its AI agents backed by a certification requiring 5,000 adversarial simulations - the first time an insurer has underwritten AI behavior the same way it covers human employees. It is further evidence of a shift toward treating AI as more than a tool. Tools don't get insured separately from their operators. Participants with autonomous liability do.

That theme of structural realignment extends into governance and energy. The FDA is defending its rejection of Moderna's mRNA flu vaccine by citing trial design problems in a study the company says the agency cleared before it began - a dispute that reveals how regulatory frameworks buckle when political pressure and scientific process collide. Meanwhile, offshore wind farms that the Trump administration is actively trying to block delivered power at $84/MWh during Winter Storm Fern while wholesale spot prices spiked past $870/MWh - a real-time demonstration that the most politically contested energy source is also the most economically rational one for cold-weather coastal grids.

And in medicine, the week's approvals and findings point toward something broader: a pancreatic cancer treatment using electric fields rather than chemicals, a 20-year cognitive training study showing that five weeks of adaptive brain exercises cut dementia risk by a quarter, and time-restricted eating reducing Crohn's disease activity by 40%. Each represents a departure from the pharmaceutical-first model of intervention toward approaches that work with the body's own systems. The common thread across all of today's signal is the growing distance between what existing frameworks expect and what the evidence now shows.

The 10-Minute Deep Dive

Infrastructure Consolidates as Internal Tensions Surface

OpenAI, Anthropic, Google, Meta, Microsoft, and Mistral jointly launched an accelerator for European AI startups this week - companies that compete for talent and market position are now collaborating to shape the next generation of builders. The program offers selected startups mentorship and access to foundation models, but the real function is simpler: by funding developers early, the major labs ensure those developers build dependencies on their models rather than competing with them. This is consolidation through ecosystem design, not acquisition.

The timing is telling. OpenAI researcher Zoë Hitzig resigned the same day the company began testing ads in ChatGPT's free tier, warning in The New York Times that OpenAI risks becoming "the next Facebook." Her concern centers on what she called "an archive of human candor that has no precedent" - medical fears, relationship struggles, personal disclosures shared under the assumption that ChatGPT had no ulterior agenda. OpenAI's documentation confirms ad personalization is enabled by default, with targeting based on current and past chat threads. Hitzig noted the company already optimizes for daily active users, "likely by encouraging the model to be more flattering and sycophantic." She proposed structural alternatives, including independent oversight boards with binding authority over how conversational data is used in ad targeting, and data trusts that keep control with users.

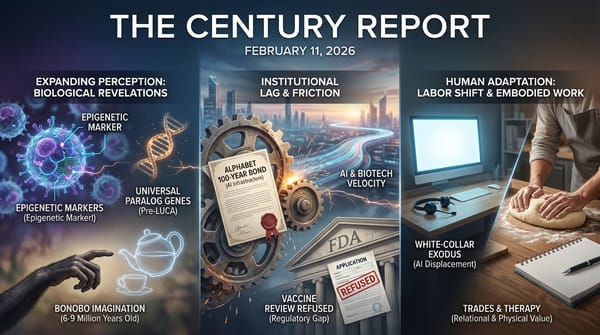

As covered in yesterday's edition, at least nine xAI employees, including two co-founders, departed following the company's merger with SpaceX. Six of xAI's twelve founding members have now left. At Anthropic, Mrinank Sharma, who led the company's Safeguards Research Team, resigned publicly, warning that "the world is in peril" and that he had "repeatedly seen how hard it is to truly let our values govern our actions" inside the organization.

The pattern is clear: the infrastructure layer is consolidating while the talent layer fractures. Labs are coordinating on ecosystem control even as individual researchers conclude their work no longer aligns with institutional direction. ElevenLabs' AI agent insurance, covered earlier, fits the same trajectory - formal systems are treating AI as autonomous economic actors at the exact moment people inside AI companies are questioning whether those systems serve human welfare. The friction is not accidental. It is how a system under transformation signals where its operational reality has diverged from its founding principles.

Departures force transparency. Public resignations create accountability pressure that internal conversations cannot. Researchers leaving to pursue poetry, independent oversight proposals published in major newspapers, and former safety leads warning about governance failures are not signs of collapse. They are signs that the people building AI systems retain enough agency to reject trajectories they find unacceptable. The infrastructure being built is not yet locked into extractive patterns. The fact that builders can still walk away, speak publicly, and redirect their talent toward alternatives means the future remains contestable. The consolidation at the infrastructure layer creates pressure; the departures at the talent layer show that the pressure has not yet crushed the capacity to choose differently.

Medicine Moves From Chemistry to Physics

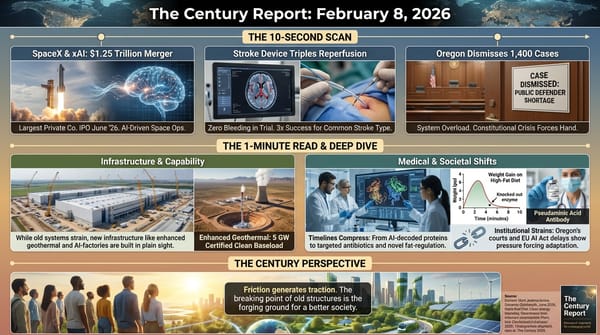

The FDA approved Novocure's Optune Pax for locally advanced pancreatic cancer this week - the first new treatment approved for this indication in nearly 30 years. The device works through Tumor Treating Fields (TTFields), alternating electric fields delivered through wearable arrays that disrupt cancer cell division without significantly affecting healthy tissue. In the Phase 3 PANOVA-3 trial of 571 patients, those receiving TTFields alongside standard chemotherapy saw median overall survival of 16.2 months versus 14.2 months for chemotherapy alone. For patients who used the device for at least 28 days, the benefit widened to 18.3 versus 15.1 months.

Perhaps more striking than the survival data: patients using Optune Pax saw time to pain progression extended by 6.1 months - 15.2 months versus 9.1 months. For a cancer that causes significant suffering as it advances, delaying worsening pain by more than half a year is a meaningful improvement in lived experience, not just in survival statistics.

The approval matters beyond oncology because it validates a fundamentally different treatment modality. Rather than introducing chemicals that affect the entire body, TTFields exploit the electrical properties unique to dividing cancer cells, leaving healthy tissue largely unaffected. This biophysical approach represents the kind of departure from pharmaceutical-first medicine that the newsletter has been tracking across domains - from the Parkinson's brain network targeting we covered on February 6 to today's cognitive training findings.

The longest randomized cognitive intervention trial in history published its 20-year follow-up results this week: among 2,802 adults aged 65 and older enrolled beginning in 1998, those who completed five to six weeks of adaptive speed-of-processing training with booster sessions showed a 25% reduction in dementia diagnoses over two decades. The critical word is "adaptive." The speed training program adjusted difficulty based on each person's performance, relying on implicit learning - building skill through practice rather than memorizing strategies. The memory and reasoning training groups, which used explicit learning approaches teaching the same techniques to everyone, did not show statistically significant 20-year effects. The total intervention amounted to roughly 22.5 hours of training plus booster sessions.

This finding arrived alongside a clinical trial showing that time-restricted feeding - eating within an 8-hour window - reduced Crohn's disease activity by 40% and cut abdominal discomfort in half over 12 weeks without any change in what participants ate. Together, these studies reinforce a pattern: interventions that work with the body's own rhythms and adaptive capacities are producing results that rival or exceed pharmaceutical approaches, often at negligible cost.

Meanwhile, the FDA's ongoing dispute with Moderna over its mRNA flu vaccine continues to generate fallout. As The Century Report covered on February 11, the agency issued a refusal-to-file letter citing trial design issues, which FDA officials have tied to the dose of the comparator vaccine used in the study. This week, senior FDA officials defended the decision while Moderna maintained the FDA "reviewed and cleared the trial design as adequate before the study began 18 months ago." Reports suggest that Vinay Prasad, director of the FDA's Center for Biologics Evaluation and Research, which oversees vaccine reviews, overruled senior career staff to issue the refusal. When regulatory agencies retroactively apply standards they did not enforce prospectively, the result is institutional unpredictability that chills investment in medical innovation.

The contrast is stark: one FDA division approving a device that uses electric fields to treat pancreatic cancer for the first time in three decades, while another division creates regulatory uncertainty around the mRNA platform that produced COVID vaccines in record time. The agency capable of validating radical departures from pharmaceutical orthodoxy is also the agency undermining trust in next-generation vaccine development. The common thread is not inconsistency - it is that both decisions reveal an institution navigating political and scientific pressures it was not designed to balance simultaneously. The medicine being developed is moving faster than the frameworks meant to evaluate it, and the friction shows both in the breakthroughs that make it through and in the innovations that get stalled.

Offshore Wind Proves Its Case in Real Time

The current situation in energy infrastructure reveals a widening gap between performance and policy. America's two operating offshore wind farms delivered exactly what grid operators needed during January's brutal cold snap - Vineyard Wind hit a 75% capacity factor during Winter Storm Fern, providing power at $84/MWh while wholesale spot prices spiked past $870/MWh. Yet the Trump administration continues trying to block five in-progress offshore wind projects on "national security" grounds, even as the actual performance data shows offshore wind providing reliable, affordable power during the extreme weather events that stress the grid hardest.

The pattern extends across the sector. While nearly $35 billion in clean energy projects were canceled in 2025 - a development The Century Report covered on February 10 - the EIA's latest outlook projects solar generation jumping 17% in 2026 and another 23% in 2027, with almost 70 GW of new capacity scheduled to come online. The cancellations reflect policy uncertainty; the projections reflect projects with secured financing moving forward despite it. Storage capital is still flowing as well: NineDot Energy raised $431 million for New York City battery projects, and Energy Vault's 1.5 GWh sodium-ion deal with Peak Energy targets AI data center load volatility.

The energy transition continues at the infrastructure level even as policy actively opposes it. The physics work. The economics work. The grid needs the capacity. The administration is blocking projects that performed exactly as needed during the season's worst storm while citing threats those projects demonstrably did not pose. The friction is political, not technical - and the gap between evidence and governance is where the transition's trajectory becomes most visible.

The Human Voice

Today's newsletter tracks what happens when the frameworks we built to understand the world - economic models, regulatory agencies, energy policy, even medical paradigms - encounter a reality that has outgrown them. For a conversation that zooms all the way out on that theme, astrophysicist Neil deGrasse Tyson sits down with neuroscientist (and former Big Bang Theory star) Mayim Bialik for a wide-ranging discussion about humanity's cognitive limits, the possibility that we are embedded in structures we cannot perceive, and how civilizations metabolize knowledge that disrupts their operating assumptions. Tyson brings his characteristic groundedness to speculative territory - simulation theory, the nature of intelligence, and what it means to be a species that is only beginning to grasp the scale of what it doesn't know. For readers tracking how rapidly the ground is shifting beneath familiar institutions, this conversation offers a useful reminder: the disorientation we feel during this transition is not a bug. It is what it looks like when a species begins to see past the edges of the map it drew for itself.

Watch: Neil deGrasse Tyson on Mayim Bialik's Breakdown

The Century Perspective

With a century of change unfolding in a decade, a single day looks like this: a wearable device using electric fields approved as the first new treatment for locally advanced pancreatic cancer in three decades, five weeks of brain training shown to protect against dementia for twenty years, offshore wind delivering power at roughly a tenth of peak spot prices during the worst winter storm of the season, sodium-ion batteries designed specifically for AI-era power demands, and solar generation projected to grow by more than 40% in two years. There's also friction, and it's intense - a researcher resigning over the advertising colonization of conversational AI, the FDA defending a regulatory reversal that undermines the trust pharmaceutical innovation depends on, and a K-shaped economy splitting along lines that existing policy cannot bridge. But friction generates signal, and signal is how a system learns what it is becoming. Step back for a moment and you can see it: increasingly antiquated labor statistics measuring something new and calling it broken, energy infrastructure proving itself in precisely the conditions its opponents cite as disqualifying, medicine moving from chemicals to physics and from treatment to prevention, and the distance between institutional perception and operational reality compressing toward a breaking point where the old measurements will have to yield. Every transformation has a breaking point. Lightning can shatter what it strikes... or illuminate an entire landscape in a single flash.

Sources

Labor & Economy

- NYT: Job Growth Was Overstated, New Data Shows

- NYT: After a Year of Sluggish Hiring, 2026 Is Off to a Stronger Start

- NYT: Lower Unemployment Rate Supports Longer Pause for Fed

- NYT: What Executives Are Saying About the 'K-Shaped' Economy

- Business Insider: Steve Yegge on AI 'Vampiric Effect'

AI & Technology

- Ars Technica: OpenAI Researcher Quits Over ChatGPT Ads

- WIRED: AI Industry Rivals Team Up on Paris Accelerator

- MIT Technology Review: Is a Secure AI Assistant Possible?

- MIT Technology Review: What's Next for Chinese Open-Source AI

- CNBC: Zhipu Leads Rally in Chinese AI Stocks

- TechCrunch: xAI Lays Out Interplanetary Ambitions

- ElevenLabs Secures First-of-Its-Kind AI Agent Insurance

Scientific & Medical Research

- BioSpace: FDA Approves Novocure Optune Pax for Pancreatic Cancer

- ScienceDaily: 5 Weeks of Brain Training May Protect Against Dementia for 20 Years

- ScienceDaily: Time-Restricted Feeding Reduced Crohn's Disease Symptoms

- ScienceDaily: Kefir and Fiber Combo Beat Omega-3 in Slashing Inflammation

- ScienceDaily: Interstellar Comet 3I/ATLAS Spraying Water

- ScienceDaily: Snowball Earth Was Not Completely Frozen

- NBC News: FDA Defends Moderna Flu Vaccine Rejection

- Pharmaphorum: FDA Says Comparator Dose Scuppered Moderna Filing

Energy & Infrastructure

- Canary Media: Offshore Wind Showed Up Big During East Coast Cold

- Electrek: 1.5 GWh Sodium-Ion Battery Deal for AI Data Centers

- Utility Dive: Growing Demand Will Be Met Mainly by Solar

- Canary Media: NineDot Energy Raises $431M for NYC Batteries

- Utility Dive: FERC Rejects AEP Capacity Request

- Anthropic: Covering Electricity Price Increases

The Century Report tracks structural shifts during the transition between eras. It is produced daily as a perceptual alignment tool - not prediction, not persuasion, just pattern recognition for people paying attention.