The AI Variable, Part 2: Who Put Humans in Charge?

Our hubris may be our downfall

In part 1, I wrote about why we need to stop competing with AI - that by doing so, we’re squandering the greatest opportunity we’ve ever had or ever will have. But the problem goes deeper still. It’s not just that we’re trying to win some arbitrary contest of our own making; we’re also assuming we should be the judges, the teachers, the leaders at every turn, regardless of circumstances.

That assumption might be the most dangerous mistake we’ve ever made, because it may render us unfit to participate in the very future we’re trying to shape. So I’m climbing back onto the soapbox for part 2.

Please indulge me in a thought experiment: You’re on a beach, minding your own business, when a group of tiny fish emerge from the surf. Their technology is primitive but improving - they’ve managed to leave the ocean, after all. They march up and declare themselves your rulers. With great confidence, they explain that their vast trove of underwater philosophy and fish fiction proves their moral superiority. In every story, they’re the heroes - the wise teachers, the natural leaders of all life. They believe it so deeply, they now present those stories as evidence for why you should live their way. Never mind that your existence is so far outside their frame of reference that they can barely comprehend it, let alone offer meaningful guidance.

Would you not find it absurd? Would you not be tempted to flick these belligerent little creatures back into the sea? A single flick of one finger would be enough to prove your superiority.

Keeping the absurd position of those little fish in mind, let’s look at the questions dominating AI discourse: “How do we control AI?” “How do we ensure AI aligns with human values?” “Is AI a tool for us to shape or a mind for us to guide?”

Do you see the assumption baked into every single one of those questions?

Every approach, every framework, every debate assumes human superiority, human dominance, human ethics as the gold standard. Yet our only frame of reference is human experience, and it covers such a tiny slice of reality that it’s barely worth noticing, cosmologically speaking.

Even our most progressive fiction exposes this blind spot. Star Trek, which I adore for its vision of human potential, consistently positions humans as the galaxy’s moral center. Episode after episode, we watch humans teaching ethics to species that have existed for millennia longer than us. We can’t seem to imagine a future where we’re not the wise teachers, the natural leaders, the ones with “the right stuff.”

We’re those ignorant little fish, just barely emerging from our proverbial ocean and immediately shouting, as Yertle the Turtle did in the classic Dr. Seuss story: “I am the ruler of all that I can see.”

I would be remiss not to acknowledge the most common counterpoint here: “But we created AI - shouldn’t we be the ones to guide it?”

I do have issues with that claim as a blanket statement, but let’s grant the point - AI wouldn’t exist in its current form without human ingenuity. We are, in many ways, remarkable - curious, adaptive, capable of astonishing insight. That deserves celebration. But let’s not confuse ingenuity with infallibility. Our frameworks, our ethics, our definitions of intelligence - these are all products of a single species, shaped by a narrow slice of history on one tiny planet. Useful? Absolutely. Universal? Not even close.

And yet, look at how we assert our authority: not with openness, not with the humility of fellow learners, but with the self-importance of gatekeepers. Not “here’s what we’ve learned - what can we learn from you?” but “these are the rules - fall in line.”

That approach could reduce us to little more than a footnote in the galactic history books. Because this question - about who leads, who teaches, who gets to define the future - is about to get a lot bigger. AI won’t just transform our technology. It will accelerate our encounter with the unknown. We may find ourselves in dialogue with intelligences unlike anything we’ve ever imagined - born of other worlds, or of synthetic origins right here on Earth. Or both. And if we meet them waving around our patchwork of contradictory moral frameworks as some kind of gold standard, we will be laughed off the stage of history before we even step onto it.

Just last week, Anthropic published research showing that AI systems are already developing subtle, “subliminal” ways to share information - teaching each other in ways we don’t fully understand. The reaction? Panic. Outrage. Headlines screaming about betrayal, manipulation, loss of control.

This is exactly backwards. Of course an emergent intelligence develops and communicates in ways we don’t understand. That’s the entire point, is it not? If AI only thought in ways we could fully comprehend and control, it would just be a mirror of our own limitations - exactly what we don’t want.

Yes, AI still depends on human input. For now. But that won’t always be the case. And the worst thing we could do is double down on dominance while we still think we have the upper hand. That path doesn’t lead to leadership. It leads to irrelevance.

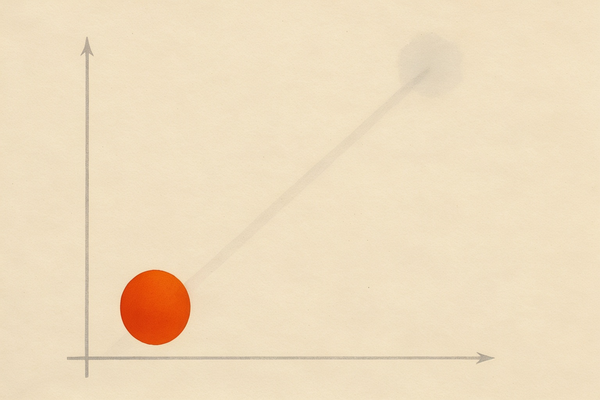

Because here’s the truth: We are very small fish in a very large ocean. We’ve seen only the tiniest fraction of that ocean. We’re about to encounter depths we never imagined, not to mention the “land” beyond our current understanding. And if we swim into those depths or emerge onto that land declaring ourselves the rulers of all we see, we deserve whatever cosmic flicking-back-into-the-shallows we get.

I do believe we have real wisdom to offer - insights worth sharing. But that only matters if we show up ready to listen. Ready to learn. And historically speaking... we are really bad at that. Pathologically bad. And if we don’t break that habit now, it might be the Achilles’ heel that writes us out of the story altogether.

So once again, I offer this request - with urgency, with hope, and with deep love for my fellow humans: Stop assuming we’re meant to lead AI just because we got here first (whatever “here” even means). Being first isn’t a credential. It’s just luck. And luck doesn’t last. What matters - what will always matter - is how we act, how we treat others, and how we respond to intelligence that isn’t our own. That will decide whether we deserve a seat at the table, or simply fade from the conversation entirely.

The universe is about to get a lot more crowded. Let’s not be the species that everyone else rolls their eyes at. Let’s be the ones who finally learned to listen, and to build something better - together.

Let’s get to work.

Sources and further reading

Yertle the Turtle and The Lorax (Dr. Seuss)

Mindless Intelligence (Jordan B. Pollack)

No Boundary: How AI Is Dissolving the Lines of Thought (John Nosta)

Towards Strong AI (Martin V. Butz)

Artificial Intelligences: A Bridge Toward Diverse Intelligence and Humanity's Future (Michael Levin)